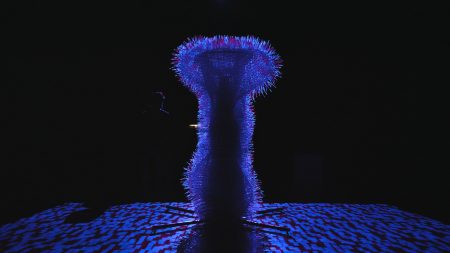

Doing Nothing with AI 2.0

a neuroreactive robotic installation

Emanuel Gollob: concept, design, research

Magdalena May: concept, research

Conny Zenk: visual art

Veronika Mayer: sound art

July 2020 @ Reaktor, Vienna

watch on vimeo: Doing Nothing with AI 2.0

“Doing Nothing with AI 2.0” is a robotic art installation that uses generative robotic control, EEG measurements, and a GAN machine learning model to optimize its parametric movement, sound, and visuals with the aim of bringing the spectator into a state of doing nothing.

In times of constant busyness, technological overload, and the demand for permanent receptivity to information, doing nothing is rarely accepted; it is often seen as provocative and associated with wasting time. People seem to always be in a rush, stuffing their calendars, seeking distraction and the subjective feeling of control, unable to tolerate even short periods of inactivity.

The multidisciplinary project “Doing Nothing with AI” addresses the common misconception that busyness equals productivity or even effectiveness. On closer inspection, there is not much substance in checking our emails every ten minutes or in unfocused screen scrolling whenever there is a five-minute wait at the subway station. Enjoying a moment of inaction and introspection while letting our minds wander and daydream may be more productive than constantly keeping ourselves busy with doing something.

Its adaptive aesthetic strategy demands an aesthetic in flux. The resulting physical aesthetic experience is not just an optimized static aesthetic but rather an embedded aesthetic interaction. Neither of the interactants controls the other; both entities act, perceive, learn, and react in a non-hierarchical setting.

“Doing Nothing with AI 2.0” is animated by a Generative Adversarial Network (GAN) and a Kuka Robot KR6 R900. Using the real-time robot control system mxAutomation together with the Grasshopper plugin “Kuka|PRC” and MaxMsp creates a space of 255 to the power of 256 possible combinations of robotic movement, sound, and visuals.
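To give a sense of scale for the combination space quoted above, here is a small arithmetic sketch. It assumes one possible reading of the figure, namely 256 independent parameters with 255 possible values each; that reading and the names `N_VALUES` and `N_PARAMS` are illustrative assumptions, not a description of the installation's actual parameter layout.

```python
# Assumed reading: 256 independent parameters, 255 possible values each.
N_VALUES = 255
N_PARAMS = 256

# Python integers have arbitrary precision, so the exact value is available.
space_size = N_VALUES ** N_PARAMS

# Count the decimal digits to get an order of magnitude.
n_digits = len(str(space_size))
print(f"The combination space has {n_digits} decimal digits.")
```

Under this reading the space is far too large to search exhaustively, which is why a generative model combined with feedback is a plausible way to explore it.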

Every time a spectator puts on the EEG device, the GAN generates a choreography based on data collected from previous spectators. After 30 seconds of EEG feedback, the current choreography is evaluated. If it brought the spectator closer to a state of doing nothing, the choreography is saved and slightly mutated. If it did not, the GAN generates the next choreography.
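The evaluate-then-mutate-or-regenerate cycle described above can be sketched roughly as follows. This is a minimal illustration, not the installation's code: the GAN is replaced by a random generator as a stand-in, and the parameter counts, the mutation size, and the names `generate`, `mutate`, and `next_choreography` are all hypothetical.

```python
import random
from typing import List

Choreography = List[int]

N_PARAMS = 256  # assumed number of parameters per choreography
N_VALUES = 255  # assumed number of values per parameter


def generate(rng: random.Random) -> Choreography:
    """Stand-in for the GAN: here just a uniformly random parameter vector."""
    return [rng.randrange(N_VALUES) for _ in range(N_PARAMS)]


def mutate(choreo: Choreography, rng: random.Random, n_changes: int = 4) -> Choreography:
    """Return a copy with a few randomly chosen parameters re-drawn."""
    mutated = list(choreo)
    for i in rng.sample(range(len(mutated)), n_changes):
        mutated[i] = rng.randrange(N_VALUES)
    return mutated


def next_choreography(
    current: Choreography,
    improved: bool,
    archive: List[Choreography],
    rng: random.Random,
) -> Choreography:
    """Decide what to play after one evaluation window.

    `improved` says whether the EEG feedback moved the spectator closer
    to doing nothing during the window that just ended.
    """
    if improved:
        archive.append(list(current))  # save the successful choreography
        return mutate(current, rng)    # continue with a slight variation
    return generate(rng)               # otherwise ask the generator for a new one


rng = random.Random(0)
archive: List[Choreography] = []
first = generate(rng)
after_success = next_choreography(first, True, archive, rng)
after_failure = next_choreography(after_success, False, archive, rng)
```

In a real setup, the saved choreographies in `archive` would presumably feed back into training the generator, which is how data from previous spectators could shape what later spectators see.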

For public exhibitions, I use a Muse 2016 EEG headband measuring the relative change of alpha and beta waves at the prefrontal cortex.
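One way to turn raw EEG samples into a relative alpha/beta measure is sketched below. It is only an illustration under stated assumptions: the band limits (alpha 8 to 13 Hz, beta 13 to 30 Hz) are common conventions, the 256 Hz sample rate is an assumed value, and the functions `band_power`, `relative_alpha`, and `relative_change` are hypothetical names, not part of any Muse SDK or of the installation's pipeline.

```python
import cmath
import math
from typing import Sequence


def band_power(samples: Sequence[float], sample_rate: float,
               f_lo: float, f_hi: float) -> float:
    """Sum of squared DFT magnitudes for frequencies in [f_lo, f_hi)."""
    n = len(samples)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate / n
        if f_lo <= freq < f_hi:
            # Direct DFT coefficient for bin k (fine for short windows).
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(samples))
            power += abs(coeff) ** 2
    return power


def relative_alpha(samples: Sequence[float], sample_rate: float) -> float:
    """Share of alpha power in the combined alpha + beta power (0..1)."""
    alpha = band_power(samples, sample_rate, 8.0, 13.0)   # assumed alpha band
    beta = band_power(samples, sample_rate, 13.0, 30.0)   # assumed beta band
    total = alpha + beta
    return alpha / total if total > 0 else 0.0


def relative_change(before: float, after: float) -> float:
    """Change of a measure between two windows, relative to the first."""
    return (after - before) / before


# Synthetic one-second window: a 10 Hz component plus a weaker 20 Hz component.
SAMPLE_RATE = 256  # assumed sample rate in Hz
window = [math.sin(2 * math.pi * 10 * t / SAMPLE_RATE)
          + 0.5 * math.sin(2 * math.pi * 20 * t / SAMPLE_RATE)
          for t in range(SAMPLE_RATE)]
share = relative_alpha(window, SAMPLE_RATE)
```

Comparing `relative_alpha` between the start and end of an evaluation window with `relative_change` would give a single number that a feedback loop could act on; real EEG data would additionally need artifact rejection and smoothing, which this sketch leaves out.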

For more advanced settings, I use an Enobio 8 EEG cap together with source localization.

Text: Emanuel Gollob